According to social media analytics firm Graphika, “AI undressing” is becoming more popular. The technique uses generative artificial intelligence (AI) tools that are tuned to remove clothing from user-provided photos. In its report, Graphika measured the number of comments and posts on Reddit and X that contained referral links to 34 websites and 52 Telegram channels offering synthetic non-consensual intimate imagery (NCII) services. The count rose from 1,280 in 2022 to over 32,100 so far this year, a 2,408% year-over-year increase in volume.

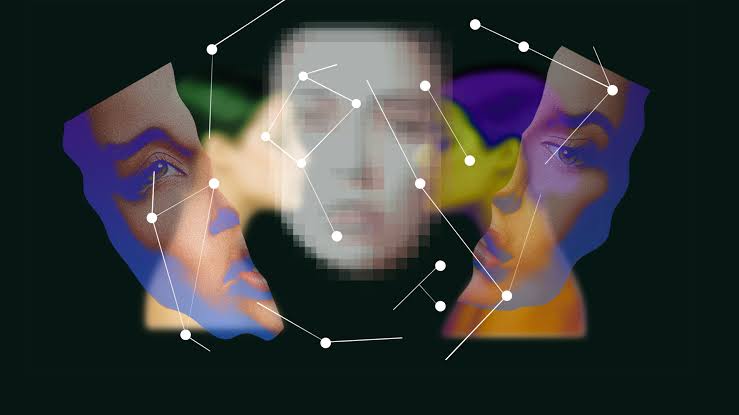

Synthetic NCII services use AI tools to produce Non-Consensual Intimate Images (NCII), typically sexual content generated without the knowledge of the people portrayed. According to Graphika, these AI tools make it simpler and more affordable for numerous providers to produce realistic explicit content at scale. Without these providers, clients would have to take on the time-consuming and sometimes costly task of maintaining their own custom image diffusion models. Graphika warns that the growing use of AI undressing tools may lead to the fabrication of more explicit material, and it raises concerns including targeted harassment, sextortion, and the production of child sexual abuse material (CSAM).

While undressing AIs usually focus on still images, AI has also been used to produce video deepfakes featuring the likeness of celebrities such as Tom Hanks and YouTuber MrBeast.

In a separate report published in October, the Internet Watch Foundation (IWF), a UK-based internet watchdog organization, said that in just one month it found 20,254 images of child abuse on a single dark web forum. The IWF warned that AI-generated child sexual abuse material could “overwhelm” the internet, and that advances in generative AI imagery have made it harder to distinguish deepfake pornography from authentic images.

AI-generated media, especially on social media, poses a “serious and urgent” threat to information integrity, according to a June 12 assessment from the UN. On Friday, December 8, European Parliament and Council negotiators reached an agreement on rules governing the use of AI within the EU.